Mismatch between descriptions of training and inference: Evaluation Framework for Predicted Trajectories – Apollo (T 606/21)

Decision T 606/21 Evaluation Framework for Predicted Trajectories – Apollo of 28 February 2023 relates to application EP 3436783 A1 (first application with filing date 22.06.2017).

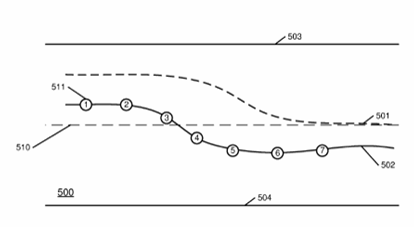

The application relates to predicting vehicular traffic in the context of autonomous vehicles. A control system for an autonomous car may comprise a prediction module that predicts the behaviour of other vehicles, such as the trajectory along which they will move in the next few seconds. The invention of this application relates to a deep neural network (DNN) trained to check, after the fact, how good the prediction was, i.e. how similar a predicted trajectory (502) is to the observed trajectory (501).

The Examining Division refused the application for insufficiency of disclosure because it described:

- a training phase where the DNN is trained on training input data comprising predicted and actual trajectories, and on an expected similarity score as a ground truth; and

- an inference phase where the DNN is supplied with a predicted trajectory and operated to generate a similarity score that indicates the similarity of the predicted trajectory to a possible actual trajectory where the object will likely move in the near future.

The Examining Division found that a neural network trained to determine a similarity between two known input data items (predicted and actual trajectory) cannot possibly be used to determine, based on a predicted trajectory alone, a similarity of the predicted trajectory and an actual trajectory that is not known yet, but will only become measurable in the future.
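The mismatch can be illustrated with a minimal sketch. It is not the applicant's model: the class `SimilarityScorer`, the constant `FEATURES_PER_TRAJECTORY`, the weights, and the feature values are all hypothetical, and a single sigmoid unit stands in for the DNN. The point is only that a model whose parameters span the features of both trajectories has no defined output when one of the two inputs is missing.

```python
import math

FEATURES_PER_TRAJECTORY = 4  # hypothetical number of features per trajectory


class SimilarityScorer:
    """Toy stand-in for the trained DNN: one sigmoid unit over both inputs."""

    def __init__(self, weights, bias=0.0):
        # One weight per feature of the predicted AND the actual trajectory.
        if len(weights) != 2 * FEATURES_PER_TRAJECTORY:
            raise ValueError("expected weights for both trajectories")
        self.weights = list(weights)
        self.bias = bias

    def score(self, predicted_features, actual_features):
        """Return a similarity score in (0, 1) for a pair of trajectories."""
        inputs = list(predicted_features) + list(actual_features)
        if len(inputs) != len(self.weights):
            raise ValueError("model needs features of both trajectories")
        z = self.bias + sum(w * x for w, x in zip(self.weights, inputs))
        return 1.0 / (1.0 + math.exp(-z))


scorer = SimilarityScorer(weights=[0.1] * 8)  # illustrative weights only
predicted = [1.0, 0.5, 0.2, 0.0]              # illustrative feature values
actual = [0.9, 0.6, 0.2, 0.1]

# Training-style call: both trajectories are known, so a score is defined.
pair_score = scorer.score(predicted, actual)

# Inference as described: only the predicted trajectory is supplied.
try:
    scorer.score(predicted)
    inference_ok = True
except TypeError:
    inference_ok = False  # the second input is simply missing
```

In this sketch the second call fails because the scorer was built to take two inputs, which mirrors the Examining Division's reasoning in simplified form.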

The applicant appealed.

Claim 1 according to the main request in appeal proceedings contains the training phase and the inference phase (indications added) and reads as follows:

A computer-implemented method for evaluating predictions of trajectories by autonomous driving vehicles, characterized in that the method comprises:

[training phase:] generating a Deep Neural Network, DNN, model, comprising:

- receiving (701) a first predicted trajectory of an object that was predicted based on first perception data of the object;

- extracting (702) predicted features from the first predicted trajectory;

- receiving (703) an actual trajectory that the object actually travelled;

- extracting (704) actual features of the actual trajectory for at least some trajectory points selected from a plurality of trajectory points of the actual trajectory, wherein both the extracted predicted features and the extracted actual features comprise a set of physical attributes and a set of trajectory related attributes, the physical attributes including a relative heading direction and a speed of the trajectory point; and

- training and generating (705) the DNN model based on the predicted features and the actual features to generate a first similarity score representing a similarity between the first predicted trajectory and the actual trajectory, wherein the first similarity score represents a similarity, modeled by the DNN model, between the known predicted trajectory and the known actual trajectory, the first similarity score closer to a first predetermined value indicating the predicted trajectory is similar to the corresponding known actual trajectory, and the first similarity score closer to a second predetermined value indicating the predicted trajectory being dissimilar to the corresponding known actual trajectory;

- comparing the first similarity score with an expected similarity score; and

- in response to the generated first similarity score being not within the expected similarity score, iteratively training the DNN model until the generated first similarity score is within the expected similarity score;

[inference phase:] receiving (801) a second predicted trajectory of the object that was generated using a prediction method based on second perception data perceiving the object within a driving environment surrounding an Autonomous Driving Vehicle, ADV;

for at least some trajectory points selected from a plurality of trajectory points of the second predicted trajectory, extracting (802) a plurality of features from the selected trajectory points, wherein the extracted features of each trajectory point comprise a set of physical attributes and a set of trajectory related attributes, the physical attributes including a relative heading direction and a speed of the trajectory point;

applying (803) the trained DNN model to the extracted features from the selected trajectory points of the second predicted trajectory to generate a second similarity score, wherein the second similarity score indicates whether the second predicted trajectory is more likely close to an actual trajectory, the object likely moves in the near future; and

determining (804) an accuracy of the prediction method based on the second similarity score.
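The feature extraction steps (702, 704, 802) can be sketched as follows. This is an illustration under stated assumptions rather than the method disclosed in the application: the function name `extract_features`, the `(t, x, y)` point format, and the reading of "relative heading direction" as the change in heading relative to the preceding segment are all hypothetical choices.

```python
import math


def extract_features(points):
    """Compute (speed, relative_heading) for each segment of a trajectory.

    `points` is a list of (t, x, y) tuples with strictly increasing t.
    Relative heading is ASSUMED here to mean the heading change with
    respect to the preceding segment, wrapped to [-pi, pi).
    """
    features = []
    prev_heading = None
    for (t0, x0, y0), (t1, x1, y1) in zip(points, points[1:]):
        dt = t1 - t0
        if dt <= 0:
            raise ValueError("timestamps must be strictly increasing")
        dx, dy = x1 - x0, y1 - y0
        speed = math.hypot(dx, dy) / dt
        heading = math.atan2(dy, dx)
        if prev_heading is None:
            relative = 0.0  # no earlier segment to compare against
        else:
            delta = heading - prev_heading
            relative = (delta + math.pi) % (2 * math.pi) - math.pi
        features.append((speed, relative))
        prev_heading = heading
    return features


# Hypothetical trajectory: one unit east, then one unit north.
trajectory = [(0.0, 0.0, 0.0), (1.0, 1.0, 0.0), (2.0, 1.0, 1.0)]
features = extract_features(trajectory)
```

The same function could be applied to a predicted and to an actual trajectory, which is what the training phase of claim 1 requires; the inference phase applies it to the second predicted trajectory only.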

The Board dismissed the appeal. It noted, in agreement with the Examining Division, that the DNN is only trained to receive actual and predicted trajectories and determine a similarity between them. On that basis, the Board raised two objections concerning the inference phase:

- The second similarity score indicates “whether the second predicted trajectory is more likely close to an actual trajectory”, i.e. a likelihood rather than a similarity (which the similarity score indicates in the training phase). Likelihood and similarity are different concepts.

- The Board did not see how the DNN can generate a second similarity score if it receives only the second predicted trajectory as an input, given that claim 1 does not define where the (unspecified) actual trajectory comes from.

Comment

The application suffered from a problem that was introduced at the drafting stage and could not be salvaged later: the descriptions of the training stage and the inference stage were incompatible. The training stage clearly led to a neural network able to compare two input datasets and determine a similarity between them, which means that the weights stored in the network contain information on how to determine that similarity. The inference phase suggested using this network to do something different, namely predicting one of the datasets based on the other. The training could not have enabled the neural network to do that.

However, the objection that claim 1 should have defined where the actual trajectory comes from seems to go too far: arguably, the claim could have been sufficiently disclosed if that information had been given in the description. What makes the claim problematic by itself is its statement that the “actual trajectory” indicates where “the object likely moves in the near future”, which seems to mean that the actual trajectory cannot be known at the time of prediction.

The decision is a reminder that different parts of the application relating to different phases of use of the AI (training and inference) should be made compatible at the drafting stage.

Another point worth mentioning is that combining the training phase and the inference phase in one claim leads to a narrower claim, which can in principle be avoided by drafting parallel independent claims. In this case, adding the training phase to the claim did not help with sufficiency.

A US patent, US11545033B2, was granted on a parallel application without any written description, enablement, or best mode objections.
